Sentiment analysis tutorial in Python: classifying reviews on movies and products

Introduction

Sentiment analysis in conjunction with machine learning is frequently employed to gain insight into how positive or negative a target group feels about a particular entity, such as a movie, product line or political candidate. The key to uncovering this is collecting samples of text from the target group (be it tweets, customer service inquiries, or, in this tutorial's case, product reviews). Sentiment analysis is a key part of natural language processing. This tutorial will guide you step by step through sentiment analysis using a random forest classifier that performs reasonably well on this data. We will use Dimitrios Kotzias's Sentiment Labelled Sentences Data Set, hosted by the University of California, Irvine. It contains movie reviews from IMDB, restaurant reviews from Yelp and product reviews from Amazon. This guide will elaborate on many fundamental machine learning concepts, which you can then apply in your next project. If you follow along with the code examples, you will have a very useful, insightful (and fun) new technique at your disposal.

Structure of tutorial

This tutorial is divided into the following sections:

1. Downloading Libraries with pip
2. Accessing the Dataset
3. Summary Statistics
4. Vectorization: Translating from English to Computer-Speak
5. Feature Selection
6. Splitting the Dataset: The Train and Test Sets
7. The Classifier
8. Hyperparameter Optimization: Maximizing Performance
9. Results Analysis

Downloading libraries with pip

Machine learning and data science can get complicated very fast: machine learning algorithms are often long and convoluted, and organizing data in a reliable manner can become a headache. Fortunately, much of the groundwork is already established via Python libraries. Using these libraries, you can build, train, and deploy a neural network in a few lines of code, rather than hundreds.
To make things easier on ourselves, we'll be using a number of libraries:

* pandas
* nltk
* string
* collections
* sklearn
* scipy

If, while following along with the code, you try importing one of these modules and receive an error, ensure the module has been installed by typing pip install modulename in the shell/command line, for instance:

pip install pandas
pip install scipy

Note that string and collections are part of Python's standard library and never need to be installed, and that sklearn is published on PyPI under the name scikit-learn, so it is installed with pip install scikit-learn. Alternatively, you can use a distribution of Python such as Anaconda, which comes with many of these libraries and more pre-installed.

All this being said, I recommend you take some time later to try doing some of these things from scratch, particularly writing the code for some machine learning algorithms (neural networks and decision trees, for example). Don't worry about doing this just yet; for now, this tutorial will suffice.

Note that nltk's stopwords list may not come pre-downloaded with the package. If you have trouble importing the stopwords list, run this once in a Python shell or add it to your Python file:

import nltk
nltk.download('stopwords')

Accessing the Dataset

We will be using Dimitrios Kotzias's Sentiment Labelled Sentences Data Set, which you can download and extract from here. Alternatively, you can get the dataset from Kaggle.com here.

The dataset consists of 3000 samples of customer reviews from yelp.com, imdb.com, and amazon.com. Half of them are positive reviews, while the other half are negative. You can read more about the data set at either of the posted links.

Once you've downloaded the .zip file and extracted the contents to a location of your choosing, you'll need to read the three .txt files in the "sentiment labelled sentences" folder into your Python session/IDE. (If you're looking for a lightweight, user-friendly environment that's used by many data scientists, I recommend Jupyter, which ships with Anaconda and can be launched from Anaconda Navigator.)

First we read the data into Python:

def openFile(path):
    # param path: path/to/file.ext (str)
    # Returns contents of file (str)
    with open(path) as file:
        data = file.read()
    return data

imdb_data = openFile('C:/Users/path/to/file/imdb_labelled.txt')
amzn_data = openFile('C:/Users/path/to/file/amazon_cells_labelled.txt')
yelp_data = openFile('C:/Users/path/to/file/yelp_labelled.txt')

Now that we have the data loaded in the Python kernel, we need to format it into a usable structure.

datasets = [imdb_data, amzn_data, yelp_data]

combined_dataset = []
# separate samples from each other
for dataset in datasets:
    combined_dataset.extend(dataset.split('\n'))

# separate each label from each sample
dataset = [sample.split('\t') for sample in combined_dataset]
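Splitting on '\n' and '\t' works, but blank lines leave behind entries that have no label. If you would rather filter those out while parsing, here is a minimal sketch. parse_labelled_text is a hypothetical helper and is not part of the original code. It assumes each line holds a review, a tab and a 0/1 label, as in the files described above. Unlike the plain split, it also trims surrounding whitespace from both fields.

```python
def parse_labelled_text(text):
    """Hypothetical helper: turn 'review<TAB>label' lines into (review, label) tuples.

    Blank lines, lines without a tab and lines whose label is not '0' or '1'
    are skipped; surrounding whitespace is trimmed from both fields.
    """
    samples = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank or whitespace-only lines
        # rpartition splits on the LAST tab, so the label is always the final field
        review, sep, label = line.rpartition('\t')
        label = label.strip()
        if sep and label in ('0', '1'):
            samples.append((review.strip(), label))
    return samples


example = "Great phone.\t1\nTerrible battery.\t0\n"
print(parse_labelled_text(example))
# [('Great phone.', '1'), ('Terrible battery.', '0')]
```

pd.DataFrame accepts the resulting list of tuples just as it accepts the list of lists used in this tutorial.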

We now have a list of the form [['review', 'label']]. A label of '0' indicates a negative sample, while a label of '1' indicates a positive one.

This list structure is a good start, but it has the potential to get messy as we interact with the data. Let's transfer the data to a pandas DataFrame, a data structure with a well-organized format and many useful methods and attributes. pandas is one of the most popular data analysis packages in Python, and it is often used by data scientists who have switched over from tools like Stata and MATLAB.

import pandas as pd

df = pd.DataFrame(data=dataset, columns=['Reviews', 'Labels'])

# Remove blank lines (they produce rows with no label)
df = df[df["Labels"].notnull()]

# shuffle the dataset for later.
# Note this isn't necessary (the dataset is shuffled again before it is used),
# but is good practice.
df = df.sample(frac=1)

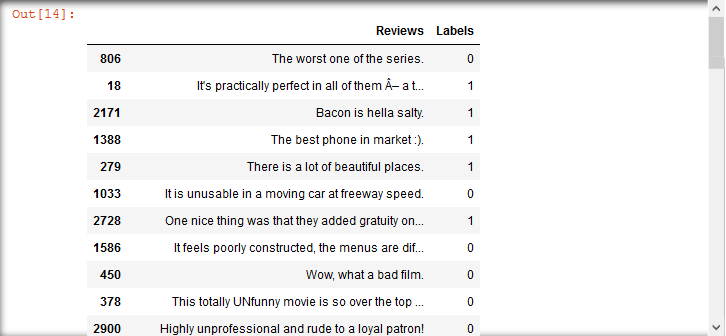

By now, the content of those text files should look something like this:

With our data well-organized, we can start with the actual sentiment analysis and content classification.

Summary statistics

Before we start throwing algorithms at our corpus, it helps to take a step back and think about patterns we can see in the data. Doing this before jumping into the machine learning will help us choose an effective strategy from the get-go and avoid wasting time on things that don't matter.

We are ultimately interested in finding differences between negative and positive reviews; this is what our classifier will be doing, after all. Since we're dealing with text, some good places to look might include:

- Sentence length
- Capitalization
- Usage of punctuation
- Word choice

We'll consider each of these for both negative and positive classes, and compare the stats. First, let's compute some of these statistics using list comprehensions and add them to our DataFrame.

import string

df['Word Count'] = [len(review.split()) for review in df['Reviews']]

df['Uppercase Char Count'] = [sum(char.isupper() for char in review)
                              for review in df['Reviews']]

df['Special Char Count'] = [sum(char in string.punctuation for char in review)
                            for review in df['Reviews']]

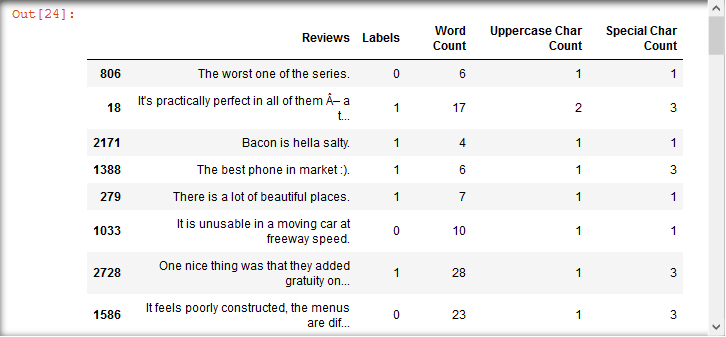

Now we have

We can use the DataFrame's built in statistic methods to get a summary of each of the new columns. Let's look at some of the summary statistics.

Word Count

positive_samples['Word Count'].describe()

count 1500.000000
mean 11.885333
std 7.597807
min 1.000000
25% 6.000000
50% 10.000000
75% 16.000000
max 56.000000
Name: Word Count, dtype: float64

negative_samples['Word Count'].describe()

count 1500.000000
mean 11.777333
std 8.140430
min 1.000000
25% 6.000000
50% 10.000000
75% 16.000000
max 71.000000
Name: Word Count, dtype: float64

Continuing in that fashion:

Uppercase Character Count

positive_samples['Uppercase Char Count'].describe()

count 1500.000000
mean 1.972667
std 2.103062
min 0.000000
25% 1.000000
50% 1.000000
75% 2.000000
max 17.000000
Name: Uppercase Char Count, dtype: float64

negative_samples['Uppercase Char Count'].describe()

count 1500.000000
mean 2.162000
std 3.912624
min 0.000000
25% 1.000000
50% 1.000000
75% 2.000000
max 78.000000
Name: Uppercase Char Count, dtype: float64

Special Character Count

positive_samples['Special Char Count'].describe()

count 1500.000000
mean 2.140667
std 1.827687
min 0.000000
25% 1.000000
50% 1.500000
75% 3.000000
max 19.000000
Name: Special Char Count, dtype: float64

negative_samples['Special Char Count'].describe()

count 1500.000000
mean 2.165333
std 1.661276
min 0.000000
25% 1.000000
50% 2.000000
75% 3.000000
max 14.000000
Name: Special Char Count, dtype: float64
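Calling describe() separately for each class works fine. As a more compact alternative, pandas can produce both summaries with a single groupby call. The sketch below uses a tiny made-up DataFrame, toy_df, which has the same column names as ours, so its numbers are illustrative only.

```python
import pandas as pd

# Made-up toy rows using the tutorial's column names (illustrative numbers only)
toy_df = pd.DataFrame({
    'Labels': ['1', '1', '0', '0'],
    'Word Count': [4, 10, 6, 12],
})

# One describe() per class, produced by a single groupby call;
# the result has one row per label and one column per statistic
summary = toy_df.groupby('Labels')['Word Count'].describe()
print(summary[['count', 'mean', 'max']])
```

On the real DataFrame, df.groupby('Labels')['Uppercase Char Count'].describe() (and likewise for the other columns) would put the per-class figures side by side in one table.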

These statistics indicate that there aren't huge differences between the classes: as far as these features go, negative and positive samples are pretty much the same. Let's see if we can spot any differences in the word choice present in either category.

We'll measure term frequency using the Counter class from Python's collections module. First, we'll need to preprocess our data a bit.

from collections import Counter

def getMostCommonWords(reviews, n_most_common, stopwords=None):
    # param reviews: column from pandas.DataFrame (e.g. df['Reviews'])
    #     (pandas.Series)
    # param n_most_common: the top n most common words in reviews (int)
    # param stopwords: list of stopwords (str) to remove from reviews (list)
    # Returns list of n_most_common words organized in tuples as
    #     ('term', frequency) (list)

    # flatten review column into a list of words, and set each to lowercase
    flattened_reviews = [word for review in reviews for word in
                         review.lower().split()]

    # remove punctuation from each word
    flattened_reviews = [''.join(char for char in word if
                                 char not in string.punctuation)
                         for word in flattened_reviews]

    # remove stopwords, if applicable
    if stopwords:
        flattened_reviews = [word for word in flattened_reviews if
                             word not in stopwords]

    # remove any empty strings that were created by this process
    flattened_reviews = [word for word in flattened_reviews if word]

    return Counter(flattened_reviews).most_common(n_most_common)
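If you'd like a more compact version of the same preprocessing, str.translate can strip punctuation from a whole review in one pass. most_common_words below is a hypothetical variant and is not the tutorial's function. For ordinary input it should produce the same counts as getMostCommonWords. It also converts the stopword list into a set, which makes each membership test constant-time.

```python
import string
from collections import Counter


def most_common_words(reviews, n_most_common, stopwords=None):
    """Hypothetical variant of getMostCommonWords using str.translate."""
    # translation table that deletes every character in string.punctuation
    strip_punctuation = str.maketrans('', '', string.punctuation)
    stopwords = set(stopwords or ())  # set membership tests are O(1)
    words = (
        word
        for review in reviews
        for word in review.lower().translate(strip_punctuation).split()
        if word not in stopwords
    )  # split() never yields empty strings, so no extra filtering is needed
    return Counter(words).most_common(n_most_common)


sample_reviews = ["Great film, great cast!", "Not great."]
print(most_common_words(sample_reviews, 1))  # [('great', 3)]
print(most_common_words(sample_reviews, 3, stopwords={'not'}))
```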

Due to the high frequency of words such as "the" and "and", we need a way to view top word counts with these words removed. The nltk library has a pre-made list of common high-frequency words, known as stopwords. We'll import that list now.

import nltk
nltk.download('stopwords')
from nltk.corpus import stopwords

We can now access a pre-made list of stopwords via stopwords.words('english'). Now let's get a quick snapshot of the two classes, with and without stopwords. First, for the positive class:

Positive Class with Stopwords

getMostCommonWords(positive_samples['Reviews'], 10)

[('the', 989),
 ('and', 669),
 ('a', 466),
 ('i', 418),
 ('is', 417),
 ('this', 326),
 ('it', 311),
 ('of', 308),
 ('to', 305),
 ('was', 257)]

Positive Class without Stopwords

getMostCommonWords(positive_samples['Reviews'], 10, stopwords.words('english'))

[('great', 198),
 ('good', 174),
 ('film', 98),
 ('phone', 86),
 ('movie', 83),
 ('one', 76),
 ('best', 63),
 ('well', 61),
 ('food', 60),
 ('place', 58)]

And now the negative class:

Negative Class with Stopwords

getMostCommonWords(negative_samples['Reviews'], 10)

[('the', 951),
 ('i', 469),
 ('and', 460),
 ('a', 420),
 ('to', 361),
 ('it', 354),
 ('is', 336),
 ('this', 313),
 ('of', 313),
 ('was', 312)]

Negative Class without Stopwords

getMostCommonWords(negative_samples['Reviews'], 10, stopwords.words('english'))

[('bad', 96),
 ('movie', 94),
 ('phone', 76),
 ('dont', 70),
 ('like', 67),
 ('one', 67),
 ('food', 64),
 ('time', 61),
 ('would', 57),
 ('film', 57)]

Right away, we can spot a few differences, such as the heavy use of terms like "good", "great", and "best" in the positive class, and words like "dont" (our punctuation stripping turns "don't" into "dont") and "bad" in the negative class. Additionally, if you increase the value of the n_most_common parameter, you can see that words like "not" (which nltk's list classifies as a stopword) are used five times as often in the negative class as in the positive class.

After spending a few minutes examining some trends in the data, we can proceed to build a model with some idea of what to focus on, and what not to. A simple classifier that just focuses on word choice seems promising.

Vectorization: Translating from English to Computer-Speak

We've come a long way, and we're now almost ready to start building our classifier. Before doing so, we need to translate our textual data into a form the computer can understand. This is commonly done via a process called vectorization. There is a myriad of vectorization schemes to choose from (if you have time, I recommend checking out word embeddings, which are more complicated but very cool). We will use the bag-of-words (BOW) model, which, though simple, is a powerful and commonly implemented tool used in industry and academia.

The premise of a BOW is to take a collection of "documents" (your corpus, which can be sentences, paragraphs, or any other string that can occupy an index in a list) and convert them to a "bag" of frequency counts for each "word" encountered in the corpus. The end result is a list of vectors (one per document), which can then be passed to a machine learning classifier. For example, the following corpus

['the cat is black',
 'I am cat like black cat',
 'the emu is black']

might be converted to the following BOW:

array([[0, 1, 1, 0, 1, 0, 1],
       [1, 1, 2, 0, 0, 1, 0],
       [0, 1, 0, 1, 1, 0, 1]], dtype=int64)

Each column represents the frequency count of a given word, and each row represents the words present in a given document. Here's a mapping of each word to its column index (note that "I" has no column: sklearn's default tokenizer lowercases the text and ignores single-character tokens):

{'am': 0, 'black': 1, 'cat': 2, 'emu': 3, 'is': 4, 'like': 5, 'the': 6}

There are a few implementations of the BOW model, including but not limited to:

Word-Frequency: The previously mentioned method of counting word frequency.

One Hot Encoding: a word is recorded as 1 if it appears in the document, regardless of its frequency, and 0 otherwise.

N-gram: Instead of individual words, the occurrence/frequency of groups of N consecutive words is measured. This helps to capture the context in which words are used.

TF-IDF (Term Frequency - Inverse Document Frequency): each word's count in a document is scaled down according to how many documents in the corpus contain that word, so rarer words have the potential to outscore more common ones. That's oversimplified, but it helps paint the picture of why this weighting scheme is useful. With sklearn's default L2 normalization, all TF-IDF term values are floats in the range [0, 1].

Here's the same corpus of three sentences used above, but vectorized using TF-IDF weighting (values rounded to 3 decimal places to save space):

array([[0.   , 0.409, 0.527, 0.   , 0.527, 0.   , 0.527],
       [0.463, 0.274, 0.704, 0.   , 0.   , 0.463, 0.   ],
       [0.   , 0.373, 0.   , 0.632, 0.480, 0.   , 0.480]])
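The TF-IDF matrix above can be reproduced with TfidfVectorizer's default settings. The sketch below also recomputes the first row by hand. It assumes sklearn's default smoothed IDF, idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t, followed by L2 normalization of each row. Other libraries use slightly different IDF formulas, so their numbers may differ.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

corpus = ['the cat is black',
          'I am cat like black cat',
          'the emu is black']

tfidf = TfidfVectorizer()  # defaults: smooth_idf=True, norm='l2'
weights = tfidf.fit_transform(corpus).toarray()
print(np.round(weights, 3))

# Recompute document 0 by hand.
# Columns: am, black, cat, emu, is, like, the
n_docs = 3
doc_freq = np.array([1, 3, 2, 1, 2, 1, 2])        # documents containing each word
idf = np.log((1 + n_docs) / (1 + doc_freq)) + 1   # smoothed IDF
raw = np.array([0, 1, 1, 0, 1, 0, 1]) * idf       # term counts of doc 0 times IDF
by_hand = raw / np.linalg.norm(raw)               # L2-normalise the row
print(np.round(by_hand, 3))                       # matches the first row above
```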

The N-gram approach is slightly out of the scope of this tutorial. The TF-IDF method slightly outperforms word-frequency on this dataset (I've already compared them), and is frequently used, so we'll proceed with that. Writing vectorization code from scratch is slightly tedious. Fortunately, sklearn has methods that take care of this for us in a few lines.

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
bow = vectorizer.fit_transform(df['Reviews'])
labels = df['Labels']

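Now, let's see how many unique words (features) we're dealing with (get_feature_names_out() replaced the older get_feature_names(), which was removed in scikit-learn 1.2):

len(vectorizer.get_feature_names_out())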

5159

We've now encountered an interesting machine learning problem. We have about 3000 samples, but 5159 features. As a general rule of thumb, you should try to have at least ten times as many samples as features: generally speaking, the more features you have compared to samples, the harder it will be for your machine learning algorithm to find strong patterns. That rule of thumb puts our minimum dataset size at 51,590 samples.

While creating a bigger dataset is almost always better, it is often infeasible to do so, as the process of gathering (and then labeling) data is both time-consuming and financially taxing. So, rather than increase the number of samples, we can decrease the number of features to achieve the magic 10:1 ratio. There are several processes and tools we can use to do so. Among the simplest is statistical feature selection.

- Tip: the aforementioned guideline is just a (very loose) rule of thumb; ultimately, the nature of the data always determines what the "minimum" ratio should be. Also remember that fewer features is not always better. Trial and error, experimentation, and statistics are your friends here. For this dataset, the rule of thumb works relatively well.

The first thing we can do is remove words that appear very infrequently in the dataset, say in fewer than 0.5% of the samples (15 of our 3000 reviews). We can do this by setting the parameter min_df to 15 when initializing our TfidfVectorizer; an integer min_df is interpreted as an absolute number of documents. Let's go ahead and re-create our vectorizer and BOW with this in mind.

vectorizer = TfidfVectorizer(min_df=15)
bow = vectorizer.fit_transform(df['Reviews'])
len(vectorizer.get_feature_names_out())

309

That alone brought us down to ~300 features, so we're approaching acceptable territory. For the sake of thoroughness, however, let's apply a more disciplined feature selection approach to remove common, "noisy" features that aren't likely to tell us much about a sentence's sentiment (like the word "the"). We'll use sklearn's implementation of the chi-squared test for this.

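from sklearn.feature_selection import SelectKBest, chi2

# select the 200 features most strongly associated with a class
# (by the chi-squared test) from the 309 features that survived min_df
selected_indices = \
    SelectKBest(chi2, k=200).fit(bow, labels).get_support(indices=True)

# vocabulary= expects words, so map the column indices back to words
selected_features = vectorizer.get_feature_names_out()[selected_indices]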

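Note that .get_support(indices=True) is used, which returns the column indices of the selected features. The vectorizer's vocabulary parameter expects words rather than column indices, so these indices have to be mapped back to words through the vectorizer's feature names before they can be reused. We could call .fit_transform on SelectKBest to create a new BOW right away, but this would result in quite the black box (we would have a new BOW, but we wouldn't know which features were selected to place in that BOW).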

Now we have a list of selected features. We'll use them to once again create a new vectorizer and BOW.

# note: sklearn ignores min_df when an explicit vocabulary is passed
vectorizer = TfidfVectorizer(min_df=15, vocabulary=selected_features)
bow = vectorizer.fit_transform(df['Reviews'])
bow

<3000x200 sparse matrix of type '<class 'numpy.float64'>'
    with 11889 stored elements in Compressed Sparse Row format>

Splitting the Dataset: The Train and Test Sets

Now that our dataset has been filtered down to a manageable size, we can start trying to train a model. The first step is to split our dataset into a training and testing set. We'll use the training set to build the model, and the testing set to evaluate its performance.

It is important that you test your model on data it has never seen before - training and testing the model on the same data might make for a good memory test, but it won't tell you a lot about how the model will perform when real-world data starts hitting it.

Before we deploy the model to the real world, we'll recombine the train and test sets and re-train the model on the entire dataset.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(bow, labels, test_size=0.33)

The above code takes a random, 2/3 slice of our BOW and the parallel list of labels, and assigns that slice to X_train and y_train, respectively. We will use this slice to train our model. We'll set the remaining 1/3 of the dataset to the side for now.
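A purely random split can let the positive/negative balance in the test set drift slightly away from 50/50, and the split changes on every run. If you want a balanced, reproducible split, train_test_split accepts stratify and random_state arguments. In this tutorial that would be train_test_split(bow, labels, test_size=0.33, stratify=labels, random_state=42), where 42 is an arbitrary seed and not part of the original code. The sketch below shows the effect on a toy array of 30 samples.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy stand-in: 30 samples, 15 per class (feature values are arbitrary)
X_toy = np.arange(60).reshape(30, 2)
y_toy = np.array(['0'] * 15 + ['1'] * 15)

# stratify keeps the 50/50 class ratio in both parts; random_state fixes the shuffle
X_tr, X_te, y_tr, y_te = train_test_split(
    X_toy, y_toy, test_size=0.33, stratify=y_toy, random_state=42)

print(len(y_tr), len(y_te))                      # 20 10
print((y_te == '1').sum(), (y_te == '0').sum())  # 5 5
```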

The Classifier

from sklearn.ensemble import RandomForestClassifier as rfc

A Random Forest is selected as our model algorithm. Random Forests are collections of decision trees. When a sample passes through the random forest, each decision tree makes a prediction as to what class that sample belongs to (in our case, negative or positive review). Once this is done, the class that got the most predictions (or votes) is chosen as the overall prediction. (Strictly speaking, sklearn's implementation averages the trees' predicted class probabilities rather than counting hard votes, but the idea is the same.)
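To see how the individual trees' outputs are combined in sklearn, the sketch below fits a small forest on synthetic data from make_classification, which stands in for our BOW. It then compares the forest's probabilities with the mean of its trees' probabilities. n_estimators=25 and random_state=0 are arbitrary choices made for this illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic two-class data, used only for illustration
X_demo, y_demo = make_classification(n_samples=200, n_features=10, random_state=0)

forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X_demo, y_demo)

# Each fitted tree lives in forest.estimators_; collect their class probabilities
tree_probas = np.stack([tree.predict_proba(X_demo[:5]) for tree in forest.estimators_])
mean_proba = tree_probas.mean(axis=0)

# The forest's probability is the mean over its trees ...
print(np.allclose(mean_proba, forest.predict_proba(X_demo[:5])))  # True
# ... and its prediction is the class with the highest mean probability
print(forest.predict(X_demo[:5]), forest.classes_[mean_proba.argmax(axis=1)])
```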

Individual decision trees (especially unpruned trees) are not very robust to new data: they are prone to overfitting. A model that overfits its dataset will over-remember trends or features present in the dataset, and will be caught off guard when those trends change with new data. This is because real-world data is frequently "noisy": small patterns can appear purely by chance, and because they are random, they aren't real trends.
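A quick way to see overfitting in action is to grow a single unpruned decision tree on data that contains label noise. The sketch below uses synthetic data from make_classification, where flip_y=0.3 assigns random labels to about 30% of the samples. The sample sizes and seeds are arbitrary. The exact test accuracy will depend on your setup, so the important part is the gap between training and test accuracy.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.3 gives about 30% of the samples a random label (pure noise)
X_demo, y_demo = make_classification(n_samples=600, n_features=20,
                                     flip_y=0.3, random_state=0)
X_fit, X_holdout, y_fit, y_holdout = train_test_split(
    X_demo, y_demo, test_size=0.33, random_state=0)

# No max_depth: the tree keeps splitting until every leaf is pure, memorising the noise
tree = DecisionTreeClassifier(random_state=0).fit(X_fit, y_fit)
print('train accuracy:', tree.score(X_fit, y_fit))          # 1.0
print('test accuracy: ', tree.score(X_holdout, y_holdout))  # noticeably lower
```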

For example, let's say you want to build a model to predict whether a student will ace a class, and you've collected some historical data on student profiles and class outcomes. You build your classifier, and it achieves 85% accuracy on the testing set. When you apply that same classifier to the real world, your accuracy drops to 70%. Why the large decrease? Well, let's say that by random chance, the average height of your dataset's successful students was 5.9ft (1.8m), and 3% of those students went by the name of "Angela". The model picks up on that, and comes to the conclusion that a student is more likely to succeed if they are 5.9ft tall and named Angela. In the real world, 5.9ft Angelas are much rarer than they were in the dataset, and the model's performance takes a dive.

A good model will fit the training data well enough to pick up on sure-fire trends, but not so well that it picks up on frivolous noise. You also want to avoid underfitting, in which you miss out on important trends. In reality, a model almost never performs as well on real-time, real-world data as it does on the testing set, as it is usually difficult to perfectly balance over- and underfitting.

classifier = rfc()
classifier.fit(X_train, y_train)
classifier.score(X_test, y_test)

0.7494949494949495

If you've been following along, congrats. You have a functioning sentiment analyzer for customer product reviews. We're not quite done yet, though, as we can do a little bit more work to bump that score up.

Hyperparameter Optimization: Maximizing Performance

A hyperparameter is any model parameter you define. You can think of hyperparameters as the classifier's settings or options menu. They are distinct from the model's general parameters (the weighting/importance given to certain features, or other trends the model finds to fit the data), which are defined automatically by the algorithm as the model fits the training data. In other words, parameters are defined during training (by the model), while hyperparameters are defined before training (by you or some hyperparameter selection algorithm). There are some exceptions to that (particularly in deep learning), but for now, our definition is sufficient.

Our first classifier used the default hyperparameter settings defined by sklearn. We may be able to do better by trying some other hyperparameter options. We'll do so via hyperparameter selection.

Hyperparameter selection consists of training and testing multiple models with different hyperparameters and selecting the model that scores the highest. Some popular methods of hyperparameter selection include:

- grid search, also known as brute-force search, which tests different combinations of hyperparameters in an organized fashion (generally slower, but likely to find a highly optimal model);
- random search, which tests models with random hyperparameter combinations;
- genetic algorithm search, which "evolves" a set of hyperparameters over several generations to produce better and better models.

We'll use random search, a simple yet very effective method, to generate 65 random models.

from sklearn.model_selection import RandomizedSearchCV
from scipy import stats

classifier = rfc()

hyperparameters = {
    'n_estimators': stats.randint(10, 300),
    'criterion': ['gini', 'entropy'],
    'min_samples_split': stats.randint(2, 9),
    'bootstrap': [True, False]
}

random_search = RandomizedSearchCV(classifier, hyperparameters, n_iter=65, n_jobs=4)
# search on the training split only, so the test split stays unseen until the final check
random_search.fit(X_train, y_train)

RandomizedSearchCV samples hyperparameter values from these distributions and lists, and the best combination it finds will define our new, optimized classifier. In current versions of sklearn, RandomizedSearchCV uses 5-fold cross-validation by default (versions before 0.22 used 3 folds), meaning each candidate model is trained and tested on 5 different train/test splits.
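To get a feel for what "random hyperparameter combinations" look like, note that sklearn exposes the sampling step on its own as ParameterSampler. The sketch below draws five candidates from the same hyperparameters dictionary. random_state=0 is added here for reproducibility and is not something the tutorial uses. stats.randint(low, high) includes low and excludes high.

```python
from scipy import stats
from sklearn.model_selection import ParameterSampler

hyperparameters = {
    'n_estimators': stats.randint(10, 300),     # integers 10..299
    'criterion': ['gini', 'entropy'],
    'min_samples_split': stats.randint(2, 9),   # integers 2..8
    'bootstrap': [True, False],
}

# Draw 5 candidate settings, the way a randomized search would
for params in ParameterSampler(hyperparameters, n_iter=5, random_state=0):
    print(params)
```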

sklearn's implementation of random search allows for CV, or cross validation. Cross validation further splits the training set into multiple train/test splits. Each candidate model is then trained and evaluated multiple times. The model with the highest average score on the CV splits is then selected. By using cross validation, we can reserve our test split for a final check on the chosen model.
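The loop that cross-validation runs for each candidate can also be written out by hand. Below is a minimal sketch on synthetic data, where make_classification stands in for our training split. It assumes plain shuffled KFold. For classifiers, RandomizedSearchCV actually defaults to stratified folds, so this sketch illustrates the idea rather than reproducing exactly what the search does.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score

# Synthetic stand-in for the training split
X_demo, y_demo = make_classification(n_samples=300, random_state=0)
model = RandomForestClassifier(n_estimators=50, random_state=0)
folds = KFold(n_splits=5, shuffle=True, random_state=0)

# Manual cross-validation: fit on 4 folds, score on the held-out fold, repeat
scores = []
for train_idx, val_idx in folds.split(X_demo):
    fold_model = clone(model).fit(X_demo[train_idx], y_demo[train_idx])
    scores.append(fold_model.score(X_demo[val_idx], y_demo[val_idx]))
print(np.mean(scores))

# cross_val_score runs the same loop for us
print(cross_val_score(model, X_demo, y_demo, cv=folds).mean())
```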

Let's retrieve the best-performing classifier from our random_search, and see how it does on our testing set.

optimized_classifier = random_search.best_estimator_
optimized_classifier.fit(X_train, y_train)
optimized_classifier.score(X_test, y_test)

0.7626262626262627

By just randomly sampling hyperparameters for 65 models, we managed to push our score a little higher (from about 0.749 to 0.763). Setting n_iter to a larger value than 65 might result in an even better model; "might", because it's still random. (Note that your results are likely to vary slightly, due to randomness introduced by the random forest, the random search, and the random shuffling/splitting of the dataset.)

Results analysis

We're nearly at the end of our long journey. Before we part ways, let's gather some insight into why our model is performing at the level it is. We'll start by having some fun with our new toy - we'll retrain our classifier on the full dataset, and pass some reviews we write through it.

optimized_classifier.fit(bow, labels)

our_negative_sentence = vectorizer.transform([
    'I hated this product. It is not well designed at all, and it broke '
    'into pieces as soon as I got it. Would not recommend anything from '
    'this company.'])

our_positive_sentence = vectorizer.transform([
    'The movie was superb - I was on the edge of my seat the entire time. '
    'The acting was excellent, and the scenery - my goodness. '
    'Watch this movie now!'])

optimized_classifier.predict_proba(our_negative_sentence)

array([[0.84355159, 0.15644841]])

optimized_classifier.predict_proba(our_positive_sentence)

array([[0.11276455, 0.88723545]])

The outputs above are formatted as [probability of negative, probability of positive]. It seems the classifier got both of our reviews right, giving our negative sentence an 84% chance of being negative, and our positive sentence an 89% chance of being positive. Let's try something a little harder, now.
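Before trying harder examples, it helps to know where that [negative, positive] column order comes from. predict_proba orders its columns by the classifier's classes_ attribute, which sklearn sorts, so our string labels '0' and '1' come out as [negative, positive]. The sketch below uses a made-up four-sample dataset and shows how to pair each probability with its label explicitly instead of relying on position.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Tiny made-up dataset using the tutorial's string labels
X_demo = np.array([[0.0], [0.1], [0.9], [1.0]])
y_demo = np.array(['0', '0', '1', '1'])

clf = RandomForestClassifier(n_estimators=10, random_state=0).fit(X_demo, y_demo)
print(clf.classes_)  # ['0' '1']: predict_proba columns follow this order

# Pair each probability with its label rather than relying on position
proba = clf.predict_proba([[0.95]])[0]
print(dict(zip(clf.classes_.tolist(), proba.tolist())))
```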

our_slightly_negative_sentence = vectorizer.transform([
    "The product was okay. I've ordered better in the past, and overall, "
    "I'd probably recommend a different product line if you're new to these. "
    "The company is good, though, and they do have some excellent products. "
    "This product isn't really one of them."])

our_slightly_positive_sentence = vectorizer.transform([
    "The back end of the phone fell off upon delivery - a testament to its "
    "cheap, plastic build. After 6 months of continued use, however, I must "
    "say this product is incredible bang for your buck. It's pretty good, and "
    "you'd be hard pressed to find something similar for this thing's low cost."])

optimized_classifier.predict_proba(our_slightly_negative_sentence)

array([[0.1031746, 0.8968254]])

optimized_classifier.predict_proba(our_slightly_positive_sentence)

array([[0.6274093, 0.3725907]])

For reviews that tread the boundary of positive and negative, our classifier has a much harder time. Let's dig into our dataset a bit, look at samples that were incorrectly classified, and see if we can confirm that take-away.

optimized_classifier.fit(X_train, y_train)

correctly_classified = {}
incorrectly_classified = {}

for index, row in enumerate(X_test):
    probability = optimized_classifier.predict_proba(row)
    # get the index label of the review in the DataFrame
    # (the DataFrame was shuffled, so labels and positions differ: use .loc)
    review_loc = y_test.index[index]
    if optimized_classifier.predict(row) == y_test.iloc[index]:
        correctly_classified[df['Reviews'].loc[review_loc]] = probability
    else:
        incorrectly_classified[df['Reviews'].loc[review_loc]] = probability
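Calling predict_proba once per row works, but sklearn estimators also accept the whole test matrix at once, which is simpler and much faster on larger test sets. The sketch below does the same bookkeeping in vectorized form. The tiny toy_ arrays and the toy_reviews Series are hypothetical stand-ins for the tutorial's X_train, y_train, X_test, y_test and df['Reviews']. Because the index of toy_y_test holds DataFrame index labels rather than positions, the reviews are looked up with .loc.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

# Made-up stand-ins for the tutorial's X_train/y_train/X_test/y_test and df['Reviews']
toy_X_train = np.array([[0.0], [0.1], [0.9], [1.0]])
toy_y_train = np.array(['0', '0', '1', '1'])
toy_reviews = pd.Series(['meh', 'loved it', 'awful', 'superb'], index=[7, 3, 12, 5])
toy_X_test = np.array([[0.2], [0.8], [0.05], [0.95]])
toy_y_test = pd.Series(['0', '1', '0', '1'], index=toy_reviews.index)

clf = RandomForestClassifier(n_estimators=10, random_state=0).fit(toy_X_train, toy_y_train)

# One call scores every test row, instead of one call per row
probabilities = clf.predict_proba(toy_X_test)
is_correct = clf.predict(toy_X_test) == toy_y_test.to_numpy()

# The index holds DataFrame index labels (not positions), so look reviews up with .loc
right_labels = toy_y_test.index[is_correct]
wrong_labels = toy_y_test.index[~is_correct]
toy_correct = dict(zip(toy_reviews.loc[right_labels], probabilities[is_correct]))
toy_incorrect = dict(zip(toy_reviews.loc[wrong_labels], probabilities[~is_correct]))
print(f'{is_correct.sum()} of {len(is_correct)} test reviews classified correctly')
```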

Misclassified Samples

for review, score in incorrectly_classified.items():
    print('{}: {}'.format(review, score[0]))
    print('-----')

That's right....the red velvet cake.....ohhh this stuff is so good.: [0.50008503 0.49991497]
-----
Again, no plot at all. : [0.52423469 0.47576531]
-----
Doesn't do the job.: [0.6735395 0.3264605]
-----
Penne vodka excellent!: [0.84047619 0.15952381]
-----
The Han Nan Chicken was also very tasty.: [0.54190239 0.45809761]
-----
I found the product to be easy to set up and use.: [0.5163053 0.4836947]
-----
We have gotten a lot of compliments on it.: [0.3891861 0.6108139]
-----
I found this product to be waaay too big.: [0.37018315 0.62981685]
-----
i felt insulted and disrespected, how could you talk and judge another human being like that?: [0.46852324 0.53147676]
...

Correctly Classified Samples

for review, score in correctly_classified.items():
    print('{}: {}'.format(review, score[0]))
    print('-----')

The last 3 times I had lunch here has been bad.: [0.89693878 0.10306122]
-----
Our waiter was very attentive, friendly, and informative.: [0.18739607 0.81260393]
-----
The interplay between Martin and Emilio contains the same wonderful chemistry we saw in Wall Street with Martin and Charlie. : [0.20173847 0.79826153]
-----
Go To Place for Gyros.: [0.39796863 0.60203137]
-----
Everything was fresh and delicious!: [0.04166667 0.95833333]
-----
I love this cable - it allows me to connect any mini-USB device to my PC.: [0.08222789 0.91777211]
-----
This is simply the BEST bluetooth headset for sound quality!: [0.06885359 0.93114641]
...

The correctly classified samples seem to contain many more "key words" (the 200 features we selected for our vectorizer, such as "delicious" and "love") than the incorrectly classified ones.

Conclusion

This tutorial is a first step in sentiment analysis with Python and machine learning. The example sentences we wrote and our quick check of misclassified vs. correctly classified samples highlight an important point: our classifier only looks at word frequency; it "knows" nothing about word context or semantics. For that, something like an n-gram BOW approach might prove beneficial. That's a bit out of the scope of this article, however. We could also change the probability threshold: at the moment, anything calculated as more than 50% likely to be positive is predicted as a positive review. Changing that threshold to, say, 60%, might help.
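Changing the threshold only requires the probabilities the classifier already produces. predict_with_threshold below is a hypothetical helper and is not part of sklearn. It predicts '1' only when the probability of the positive class reaches the chosen threshold, and it looks up the positive column via the class list instead of assuming its position. The probabilities in the example are made up. With the tutorial's model, you would call predict_with_threshold(optimized_classifier.predict_proba(X_test), optimized_classifier.classes_).

```python
import numpy as np


def predict_with_threshold(probabilities, classes, threshold=0.6):
    """Hypothetical helper: predict '1' (positive) only when P(positive) >= threshold."""
    positive_column = list(classes).index('1')  # column of predict_proba holding P('1')
    return np.where(probabilities[:, positive_column] >= threshold, '1', '0')


# Made-up predict_proba output; columns ordered like classifier.classes_ (['0', '1'])
probabilities = np.array([[0.45, 0.55],
                          [0.30, 0.70],
                          [0.80, 0.20]])
print(predict_with_threshold(probabilities, ['0', '1']))       # ['0' '1' '0']
print(predict_with_threshold(probabilities, ['0', '1'], 0.5))  # ['1' '1' '0']
```

Raising the threshold makes the classifier more conservative about calling a review positive, so some borderline positives will be labeled negative in exchange.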

For now, we've managed to go from a text file to a classifier that, with a bit of work, could help you automate many things (for instance, automate your holiday shopping on Amazon).
